Why “Thwart” Changes Everything: Cybersecurity in the AI Era Is Built at the Intersections

In more than two decades working in cybersecurity, I can confidently say I have never seen a transformation as profound, fast, and disruptive as the one artificial intelligence is driving right now. We have lived through the rise of cloud, the explosion of ransomware, the shift to zero trust, and the constant evolution of threat actors, but AI is fundamentally different. It is not just a new attack technique or a new technology stack. It is becoming the decision engine behind businesses, operations, customer experiences, software development, and increasingly, both offense and defense in cybersecurity itself. The speed, scale, and autonomy that AI introduces are changing the nature of risk in ways that traditional security models were never designed to handle. This is why the recent draft guidelines released by the National Institute of Standards and Technology (NIST) to rethink cybersecurity for the AI era feel like a turning point rather than just another framework update.

Those who have followed my previous articles already know that I am a strong advocate of, and long-time believer in, the NIST Cybersecurity Framework (CSF) as one of the most practical, real-world, and business-aligned approaches to cybersecurity. Over the years, when speaking with CISOs, board members, and business decision makers across industries, I have consistently recommended NIST CSF as the backbone of their cybersecurity strategy because it reflects how security actually works in complex organizations, not how vendors wish it worked in product brochures. The introduction of Govern as a formal core function in CSF 2.0 was, I believe, the biggest innovation in cybersecurity frameworks in decades. That single addition finally anchored cybersecurity where it has always belonged: in enterprise risk management, strategic decision-making, accountability, and business impact.

In the AI era, this governance-driven approach is no longer optional; it is essential. AI systems influence revenue, automate decisions, optimize operations, and increasingly act with minimal human intervention. A compromised model, manipulated data set, or abused AI pipeline is not just a technical incident. It is a business failure with financial, operational, regulatory, and reputational consequences. That is why seeing NIST build the new Cyber AI Profile directly on CSF 2.0, with governance and cyber risk management at its core, is so important. It acknowledges that securing AI is not about adding another security tool, but about managing intelligent risk across the entire organization.

The new draft, formally titled the Cybersecurity Framework Profile for Artificial Intelligence (NIST IR 8596), is designed to help organizations accelerate the secure adoption of AI while addressing the emerging cybersecurity threats that come with its rapid advance. It recognizes that every organization, regardless of where it is on its AI journey, must adapt its cybersecurity strategy to a reality where AI is deeply embedded in digital ecosystems and simultaneously being weaponized by adversaries. What makes this work especially powerful is that it does not treat AI security as a niche technical domain. Instead, it frames it as an evolution of enterprise cybersecurity itself, grounded in real-world risk, operational resilience, and governance-driven strategy.

Why the Word “Thwart” Changes the Entire Cybersecurity Conversation

One of the most deliberate and strategically meaningful choices made by NIST in the Cyber AI Profile is the use of the word thwart instead of relying solely on the traditional cybersecurity language of detect and respond. For decades, our industry has organized security programs around finding intrusions after they occur and reacting quickly enough to limit damage. Detection and response have become the core promises of countless technologies, operating models, and SOC transformations. But AI fundamentally breaks the assumptions behind that approach. When attacks are automated, adaptive, and operating at machine speed, detecting something milliseconds faster does not necessarily change outcomes in a meaningful way. By the time a human or even an automated workflow responds, an AI-driven campaign may already have escalated privileges, moved laterally, manipulated systems, and achieved its objectives.

The word thwart carries a very different strategic meaning. To thwart is not simply to notice something happening and react to it. To thwart means to actively frustrate a plan in motion, to interfere with an adversary’s objectives, and to deny success even when an attempt is already underway. As a verb, it implies anticipation, disruption, resistance, and continuous contest. It assumes intelligent opponents who adapt, learn, and optimize their tactics, exactly the reality of AI-powered threat actors. By choosing this word, NIST is subtly but powerfully reframing cybersecurity away from reactive incident handling and toward proactive disruption of attacker advantage. In practical terms, thwarting is about shaping the environment so attacks cannot scale, cannot achieve impact, and cannot convert access into meaningful harm. It means disrupting automated exploitation loops, degrading the effectiveness of malicious AI models, breaking attacker feedback mechanisms, embedding friction into digital systems, and limiting the ability of adversaries to move quickly and invisibly. Where detection focuses on visibility and response focuses on cleanup, thwarting focuses on outcome denial. An intrusion that cannot manipulate AI models, cannot automate lateral movement, cannot exfiltrate valuable data, and cannot disrupt business operations has effectively failed, even if technical access occurred.

This is why the shift from detect and respond to thwart is so critical in the AI era. AI-driven attacks are not single events but continuous adaptive processes. They probe defenses, learn from failures, optimize success paths, and iterate faster than any human-driven security team can track manually. A purely reactive model will always be playing catch-up. Thwarting, by contrast, forces organizations to think in terms of adversarial behavior, attacker economics, and systemic resilience. It aligns cybersecurity with strategic risk management rather than operational firefighting.

There is also a deeper business implication embedded in this language choice. Detection and response tend to measure success in technical terms such as dwell time, mean time to respond, or number of incidents closed. Thwarting measures success in business outcomes such as prevented impact, preserved operations, protected revenue, and sustained trust. It naturally connects cybersecurity to enterprise risk management, executive decision-making, and resilience metrics. In a world where AI systems increasingly drive business value, preventing meaningful harm becomes far more important than chasing perfect prevention.

Ultimately, the adoption of the word thwart reflects a recognition that cybersecurity in the AI era is an intelligent contest, not a static control problem. It acknowledges that some level of intrusion or interaction with adversaries may be inevitable in complex digital ecosystems, especially those built on interconnected AI supply chains and automated systems. What matters is whether attackers can achieve their goals. Thwarting shifts the objective of cybersecurity from trying to stop every breach to consistently denying adversaries strategic success. In many ways, this single word captures the future of cyber defense. Not faster alerts. Not bigger SOCs. Not more tools. But smarter, risk-driven, adaptive security architectures designed to continuously disrupt attacker advantage in an AI-powered threat landscape.

Why Thwarting Becomes Essential in an Era of AI-Agent Orchestrated and Industrialized Attacks

One of the deepest reasons the concept of thwarting is so critical in the new AI cybersecurity model championed by NIST is that cyberattacks are no longer handcrafted, episodic, or limited by human speed. We are rapidly entering an era of AI-agent orchestrated threats, where autonomous systems continuously scan environments, identify weaknesses, adapt techniques, launch coordinated exploitation chains, and optimize themselves in real time. These are not single intrusions but industrialized attack operations, running like factories, where reconnaissance, social engineering, vulnerability discovery, exploitation, lateral movement, and data exfiltration are all automated and intelligently linked. In this world, attackers do not wait for defenders to react. They iterate faster than human-driven security operations can keep up, learning from every blocked attempt and immediately shifting tactics until a path to impact is found.

Traditional cybersecurity models built around detection and response were designed for a slower threat landscape, one where humans planned attacks, executed them manually, and left observable gaps between phases. AI-orchestrated attacks remove those gaps. When a malicious agent can probe thousands of assets per minute, generate tailored phishing content at scale, adapt payloads dynamically, and coordinate multi-vector attacks across cloud, endpoint, identity, and AI systems simultaneously, simply detecting activity and responding after the fact becomes structurally insufficient. By the time a response workflow is triggered, the attack may have already evolved through multiple phases and achieved its objective.

This is exactly why NIST’s shift toward the concept of thwarting is not semantic but strategic. Thwarting assumes that attacks will be continuous, adaptive, and autonomous. Instead of waiting to observe compromise and then reacting, thwarting focuses on actively breaking attacker momentum, denying automation advantages, and preventing the conversion of access into meaningful impact. In an industrialized attack model, adversaries depend on speed, scalability, and clean feedback loops to optimize their campaigns. Thwarting works by introducing friction into those loops. It disrupts automated reconnaissance with deception and dynamic environments, degrades exploitation success through adaptive controls, limits lateral movement with resilient architectures, constrains privilege escalation, and blocks the ability to monetize or operationalize access. When attackers can no longer scale efficiently, learn quickly, or reliably achieve outcomes, their entire economic model collapses.

This is especially important in AI-agent ecosystems, where multiple malicious agents may coordinate like a distributed system. One agent may specialize in reconnaissance, another in phishing, another in vulnerability exploitation, another in persistence, and another in data extraction. Together they form an autonomous attack pipeline. Detection might catch individual actions, but thwarting targets the system of attack itself. It is about breaking orchestration, disrupting coordination, and preventing the attack chain from completing its business objective. In this sense, thwarting is systems thinking applied to cyber defense, not event handling. There is also a profound risk management implication here. Industrialized AI attacks aim for scale, not just individual compromise. They seek systemic weaknesses that can be exploited repeatedly across thousands of organizations, cloud environments, and digital ecosystems. Thwarting therefore becomes a resilience strategy at both organizational and ecosystem levels. It is about preventing cascading failures, large-scale exploitation waves, and automated compromise campaigns from turning into widespread business disruption. This aligns directly with cyber risk management, where the goal is not simply reducing alerts but limiting systemic impact. Ultimately, in a world of AI-agent orchestrated threats, cybersecurity can no longer be a race to detect faster or respond quicker. It must become an active discipline of disruption, denial, and resilience engineering. Thwarting is the concept that captures this new reality. It recognizes that attackers now operate like autonomous digital factories, and the only sustainable defense is to consistently break their ability to scale, adapt, and succeed. This is why thwart is not just a new word in a framework. It is the defining objective of cybersecurity in the age of industrialized AI attacks.

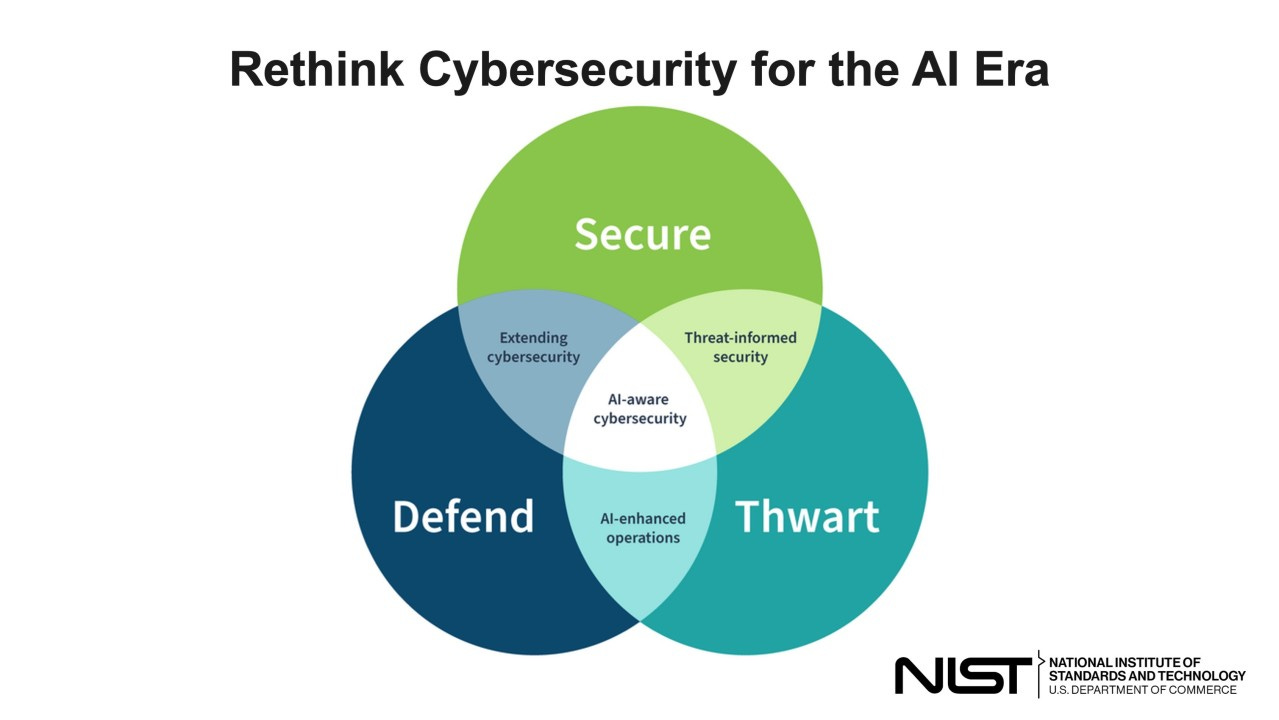

The Power of the Venn Diagram: Making AI-Aware Cybersecurity Visually Real

One of the reasons I immediately connected with the Cyber AI Profile released by NIST is the way it uses a Venn diagram to explain something that is strategically complex in a visually intuitive way. I have always loved Venn diagrams because they reflect how cyber risk works in the real world, not in silos, not in linear processes, but as overlapping forces that influence each other continuously. My own visual definitions of cyber risk have long relied on intersections between technology, threats, business impact, and governance, so seeing AI cybersecurity framed through these overlapping domains feels both practical and deeply aligned with reality. Below is how each area of the diagram comes to life, and why every intersection matters in the AI era.

Secure: Protecting AI as Critical Digital Infrastructure

The Secure circle represents the foundational responsibility of protecting AI systems themselves. This is where organizations focus on safeguarding training data, machine learning models, algorithms, deployment pipelines, APIs, and the fast-growing AI supply chain of open-source libraries and third-party services. AI introduces entirely new attack surfaces where adversaries can poison data to manipulate outcomes, steal intellectual property embedded in models, insert hidden backdoors, or exploit vulnerabilities in supporting frameworks. Securing AI is no longer just an extension of application security; it is about protecting the digital brain that increasingly drives business decisions. Without this layer, every AI-driven process becomes a potential risk amplifier.

Defend: Using AI to Strengthen Cybersecurity Operations

The Defend circle reflects how AI can be leveraged to enhance cybersecurity itself. Modern environments generate massive volumes of telemetry that humans simply cannot analyze in real time. AI enables advanced threat detection, behavioral analytics, automated investigation, intelligent prioritization, and rapid response at machine speed. This is where security operations become scalable, adaptive, and capable of keeping up with automated adversaries. But it also requires governance and trust, ensuring models are reliable, transparent, and aligned with organizational risk objectives. Defending with AI is not about replacing humans; it is about augmenting them so cybersecurity can operate effectively in hyper-complex digital ecosystems.

Thwart: Proactively Disrupting AI-Enabled Attacks

The Thwart circle is the most transformative concept in the framework. It goes beyond detection and response and focuses on actively denying attackers success. Thwarting means anticipating how adversaries use AI, disrupting automated attack chains, degrading malicious model effectiveness, limiting feedback loops that allow attackers to learn quickly, and embedding friction into digital systems so attacks cannot scale or achieve meaningful impact. It accepts that in complex environments some level of intrusion may happen, but it ensures those attempts fail to translate into business harm. This is resilience thinking applied to intelligent threats.
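To make "embedding friction" more concrete, here is a minimal Python sketch of one possible friction mechanism: an escalating delay applied to any source that keeps generating rejected requests. It is an illustration only, not something taken from the NIST profile. The class name `FrictionPolicy`, its parameters, and the source identifiers are all hypothetical.

```python
from collections import defaultdict


class FrictionPolicy:
    """Escalate the delay imposed on a source after each rejected request.

    Hypothetical illustration: the names and thresholds are assumptions,
    not part of any NIST guidance.
    """

    def __init__(self, base_delay: float = 0.5, max_delay: float = 60.0):
        self.base_delay = base_delay
        self.max_delay = max_delay
        self._failures: dict[str, int] = defaultdict(int)

    def record_failure(self, source: str) -> None:
        """Count one more rejected request from this source."""
        self._failures[source] += 1

    def record_success(self, source: str) -> None:
        """A legitimate request resets the friction for this source."""
        self._failures.pop(source, None)

    def delay_for(self, source: str) -> float:
        """Seconds the caller should wait before serving this source again."""
        failures = self._failures.get(source, 0)
        if failures == 0:
            return 0.0
        # Double the delay with every failure, capped at max_delay.
        return min(self.base_delay * 2 ** (failures - 1), self.max_delay)


policy = FrictionPolicy()
for _ in range(4):
    policy.record_failure("scanner-01")

scanner_delay = policy.delay_for("scanner-01")   # 0.5 * 2**3 seconds
normal_delay = policy.delay_for("employee-42")   # no failures recorded
```

The design intent is that a normal user never notices the control, while an automated loop that depends on thousands of fast attempts sees its iteration speed collapse. In practice this would be only one small piece of a broader set of controls.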

Secure + Defend: Extending Cybersecurity Into the AI Ecosystem

Where Secure and Defend overlap is the space of extending cybersecurity into AI systems themselves. Here, organizations not only protect AI assets but continuously monitor and defend them using intelligent analytics. Model behavior is assessed for anomalies, data pipelines are validated for integrity, and AI environments are defended dynamically rather than through static controls. This is where AI security becomes adaptive, constantly learning and adjusting to new risk patterns instead of relying on periodic audits or one-time protections.
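As a simple illustration of validating data pipelines for integrity, the following Python sketch records SHA-256 digests of approved artifacts and later reports anything that is missing, unexpected, or altered. It is a minimal sketch under stated assumptions: the function names, the in-memory artifacts, and the file names are hypothetical, and a real pipeline would also need to protect the manifest itself, for example by signing it.

```python
import hashlib
import hmac


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest of a data artifact."""
    return hashlib.sha256(data).hexdigest()


def build_manifest(artifacts: dict[str, bytes]) -> dict[str, str]:
    """Record a trusted digest for every artifact at approval time."""
    return {name: fingerprint(blob) for name, blob in artifacts.items()}


def verify_artifacts(artifacts: dict[str, bytes], manifest: dict[str, str]) -> list[str]:
    """Return the names of artifacts that are missing, unexpected, or altered."""
    problems = []
    for name in sorted(set(artifacts) | set(manifest)):
        if name not in artifacts or name not in manifest:
            problems.append(name)
        elif not hmac.compare_digest(fingerprint(artifacts[name]), manifest[name]):
            problems.append(name)
    return problems


# Hypothetical artifacts standing in for a training set and a model file.
approved = {"train.csv": b"label,text\n0,hello\n", "model.bin": b"\x00\x01weights"}
manifest = build_manifest(approved)

tampered = dict(approved)
tampered["train.csv"] = b"label,text\n1,hello\n"  # simulated label-flipping change

clean_result = verify_artifacts(approved, manifest)
tampered_result = verify_artifacts(tampered, manifest)
```

A check like this only detects changes after the manifest was created. It does not tell you whether the approved data was trustworthy in the first place, which is why it belongs alongside continuous monitoring of model behavior rather than in place of it.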

Secure + Thwart: Threat-Informed AI Security

The overlap between Secure and Thwart represents threat-informed security specifically tailored to AI risks. Instead of generic controls, defenses are designed based on how adversaries actually attack AI systems. This includes preparing for model poisoning, prompt injection, AI supply-chain compromise, adversarial inputs, and automated exploitation. Security architecture in this zone is built around attacker behavior and real-world threat scenarios, not just compliance requirements. It is where organizations proactively close the pathways that AI-driven attackers rely on to succeed.

Defend + Thwart: AI-Enhanced Cyber Operations

Where Defend and Thwart intersect is the realm of AI-enhanced operations that actively disrupt adversaries. This includes automated containment, intelligent deception, adaptive response playbooks, real-time attack disruption, and systems that learn from attacker behavior to continuously improve defenses. It is where defenders begin to truly compete at the same speed and scale as AI-powered threats. Instead of reacting after damage begins, security operations interfere with attacks as they unfold and prevent them from achieving their objectives.

The Center: AI-Aware Cybersecurity

At the very center of all three circles sits AI-aware cybersecurity, the future state the framework is guiding organizations toward. This is where AI systems are secured, AI is leveraged defensively, and AI-enabled threats are proactively thwarted under strong governance and cyber risk management. It reflects a cybersecurity posture built for intelligent systems operating in contested environments, where resilience, adaptability, and business impact prevention matter more than simply stopping individual incidents.

Human in the Loop: Trust, Governance, and Control in an AI-Driven Cyber Battlefield

As cybersecurity evolves toward AI-enabled defense and proactive thwarting of industrialized attacks, one principle becomes more important than ever: keeping humans in the loop. The shift toward intelligent automation does not mean removing human judgment from security operations. On the contrary, in an era of autonomous AI agents operating at machine speed on both sides of the battlefield, human oversight becomes the anchor of trust, accountability, and strategic risk management. This is where the Cyber AI Profile from NIST aligns perfectly with the broader evolution of cybersecurity toward governance and cyber risk management rather than pure technical reaction.

AI can analyze vast data sets, correlate complex patterns, disrupt automated attacks, and operate continuously without fatigue, but it does not inherently understand business context, risk tolerance, regulatory exposure, or strategic priorities. Humans do. When AI systems are allowed to act without guardrails, organizations risk trading cyber threats for operational, legal, and ethical failures. A fully autonomous response that shuts down critical systems, blocks business processes, or disrupts customer operations may technically stop an attack while simultaneously creating massive business impact. Human in the loop ensures that automated security actions are aligned with real-world consequences and enterprise risk appetite.

In the context of thwarting AI-enabled attacks, human oversight plays an even more strategic role. Thwarting is not simply about blocking technical indicators. It involves shaping defensive environments, deciding where friction should be introduced, determining acceptable tradeoffs between security and usability, and continuously adjusting strategy as adversaries evolve. AI can execute disruption tactics at speed, but humans must define the objectives, boundaries, and escalation logic that ensure resilience rather than chaos. This turns cybersecurity from an automated reaction engine into an intelligent, governed risk control system. Human in the loop also directly addresses the trust gap that many organizations feel toward automation. While security teams increasingly want AI-driven remediation and disruption, most are not ready to hand over full control without transparency and validation. Keeping humans in the loop allows AI systems to propose actions, simulate outcomes, justify decisions, and learn from human feedback over time. This builds confidence gradually, enabling organizations to move from assisted automation to conditional autonomy and eventually to highly trusted autonomous defense where appropriate. Trust is not created by removing people. It is created by showing reliability, explainability, and consistent positive outcomes.
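One way to picture the progression from assisted automation to conditional autonomy is a routing rule that lets low-impact, high-confidence actions run automatically and escalates everything else to a person. The Python sketch below is an illustration under assumptions of my own: the `ProposedAction` fields, the 1 to 5 impact scale, the thresholds, and the host names are hypothetical and would need to be defined by each organization's risk appetite.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    AUTO_EXECUTE = "auto_execute"
    NEEDS_APPROVAL = "needs_approval"


@dataclass(frozen=True)
class ProposedAction:
    name: str
    target: str
    business_impact: int   # 1 (negligible) to 5 (severe), an assumed scale
    confidence: float      # model confidence in the detection, 0.0 to 1.0


def route(action: ProposedAction,
          max_auto_impact: int = 2,
          min_confidence: float = 0.9) -> Decision:
    """Auto-execute only low-impact, high-confidence actions; escalate the rest."""
    if action.business_impact <= max_auto_impact and action.confidence >= min_confidence:
        return Decision.AUTO_EXECUTE
    return Decision.NEEDS_APPROVAL


# Same action type, very different business consequences.
quarantine_laptop = ProposedAction("isolate_host", "laptop-117", business_impact=1, confidence=0.97)
shutdown_erp = ProposedAction("isolate_host", "erp-prod-db", business_impact=5, confidence=0.97)

laptop_decision = route(quarantine_laptop)
erp_decision = route(shutdown_erp)
```

Here the laptop isolation runs automatically, while isolating the production database is held for human approval even though the detection confidence is identical. Raising `max_auto_impact` over time is one way trust in automation could be extended gradually as the system proves reliable.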

From a governance perspective, human in the loop becomes a core cyber risk control. It ensures accountability for automated decisions, supports compliance with regulatory frameworks, and aligns cybersecurity actions with executive oversight. In an AI-driven enterprise, every automated security response is effectively a business decision. Whether isolating a system, blocking a user, disrupting a workflow, or neutralizing an AI process, these actions have operational and financial consequences. Human oversight ensures those consequences are intentional, measured, and aligned with organizational priorities.

Ultimately, the combination of AI-enabled defense, proactive thwarting, and strong human governance is what transforms cybersecurity into true resilience. AI provides speed, scale, and adaptability. Humans provide judgment, strategy, context, and accountability. Together, they create a security posture capable of surviving in an environment of industrialized AI attacks without sacrificing trust, control, or business continuity. In the AI era, the future of cybersecurity is not human versus machine. It is human-guided intelligence versus adversarial intelligence. And keeping humans in the loop is what ensures that power is used wisely, strategically, and in service of real risk reduction.

Resilience Lives in the Intersections

This Venn diagram captures a fundamental truth about cybersecurity in the AI era: it is no longer a linear sequence of controls or a checklist of activities, but a living ecosystem of overlapping capabilities that must operate together. Securing AI systems without defending with AI leaves organizations perpetually behind adversaries who move at machine speed. Defending with AI without first hardening AI itself builds sophisticated security operations on fragile and easily manipulated foundations. And continuing to rely primarily on detection and response, without deliberately engineering for thwarting, ensures that defenders remain in a constant race after impact has already begun, reacting to automated attackers rather than denying them success.

In the age of AI, true cyber resilience is not found in individual controls or isolated functions. It lives in the intersections, where security, intelligence, disruption, and governance converge to consistently prevent meaningful harm.

NIST (2025). Cybersecurity Framework Profile for Artificial Intelligence (Cyber AI Profile). https://www.nist.gov/news-events/news/2025/12/draft-nist-guidelines-rethink-cybersecurity-ai-era DOI:10.6028/NIST.IR.8596.iprd

Castro, J. (2024). Integrating NIST CSF 2.0 with the SOC-CROC Framework: A Comprehensive Approach to Cyber Risk Management. ResearchGate. https://www.researchgate.net/publication/388493049 DOI:10.13140/RG.2.2.13387.50720/1

Castro, J. (2025). What Is Governance in Cybersecurity? ResearchGate. https://www.researchgate.net/publication/393065290 DOI:10.13140/RG.2.2.30988.63360

Castro, J. (2025). Cyber Risk and Exposure: Where Risk Meets Reality. ResearchGate. https://www.researchgate.net/publication/395107652 DOI:10.13140/RG.2.2.12965.56804
