Understanding the AI Shared Responsibility Model: A Comprehensive Framework for Security and Risk Management Across AI Service Models

When I first began working deeply with cloud security over a decade ago, one insight completely reshaped how I approached technology risk: never assume the provider is doing more than they actually are. In almost every early conversation with enterprises, teams believed that by “moving to the cloud,” security, compliance, governance, and even operational controls were automatically taken care of because the provider offered them as part of the service. But the reality was very different. The provider handled the security of the cloud (physical infrastructure, hypervisors, global availability), while customers were fully responsible for the security in the cloud: identity, access, data governance, logging, configuration, and everything users touched.

That lesson became the foundation of my thinking: the questions you ask determine the risks you uncover. And when the industry began adopting AI at scale, I immediately saw the same assumptions reappearing, but with far greater consequences. Teams assumed the AI provider handled safe model behavior, ethical constraints, data filtering, output control, and operational security. They assumed adversarial protections were already built in. They assumed training data was secure and unbiased. They assumed autonomy was safe. They assumed that prompt injection, model poisoning, and deepfake risks were somehow “built into the service” and therefore handled automatically. None of this was true.

AI does not simplify responsibility; it multiplies it. Its complexity, adaptability, and autonomy introduce entirely new categories of risk that do not exist in traditional software or cloud systems. The more I worked with leaders, the clearer it became: organizations needed a model that made AI risk understandable, actionable, and structured. The AI Shared Responsibility Model grew directly from this experience and from the urgent need to help decision makers understand responsibility boundaries without requiring deep AI or ML expertise.

The Strategic Conversation

As organizations accelerate their adoption of artificial intelligence across business functions, the division of security, governance, and operational responsibilities between AI service providers and customers has become a critical topic. Unlike traditional software systems, AI introduces dynamic risks: models adapt, outputs vary, data sources shift, and autonomy expands the attack surface in unpredictable ways. This complexity requires a structured approach to defining who is responsible for what. The AI Shared Responsibility Model provides precisely that structure, offering a comprehensive framework that maps responsibilities across different AI deployment types, from fully managed SaaS AI solutions to deeply controlled on-premises implementations.

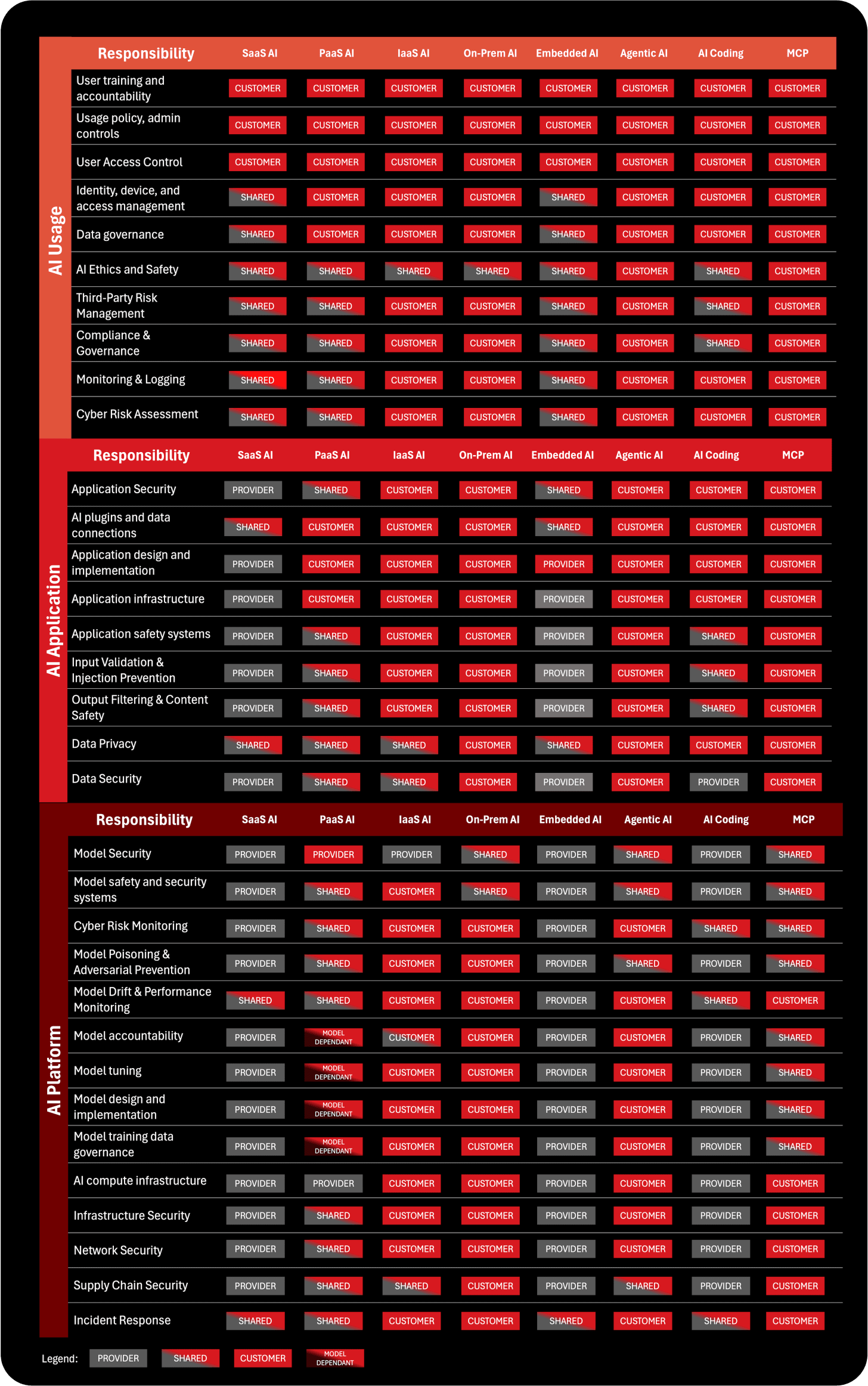

This model was created to help decision makers, including CEOs, CISOs, CIOs, CROs, data officers, risk managers, and procurement leaders, understand the shifting boundaries of responsibility across the AI lifecycle. It outlines 33 distinct responsibility areas organized within three categories: AI Usage, AI Application, and AI Platform. These responsibilities cover everything from user training and access control to adversarial protection, model security, safety systems, data privacy, supply chain risk, and incident response. By clearly defining these responsibilities, organizations can evaluate AI vendors more effectively, negotiate contracts and SLAs with greater precision, and align internal teams around accurate risk expectations. DOI: 10.13140/RG.2.2.24062.65605

Understanding the Three Layers of the AI Shared Responsibility Model

The AI Shared Responsibility Model organizes responsibilities into three interconnected layers: AI Usage, AI Application, and AI Platform. These layers reflect how AI is actually adopted inside organizations, from human interaction, to business workflow design, down to the technical core of models and infrastructure. By separating AI into these layers, leaders can pinpoint where responsibilities sit, where risks emerge, and which teams must be accountable. This structure also makes it possible to compare AI deployments consistently across service types, vendors, and use cases.

Understanding these three layers gives leaders a structured way to analyze any AI deployment. Instead of vague questions like “Is this AI secure?” or “Is the provider responsible?”, the model shifts the conversation to:

How are users governed and trained? (Usage)

How is the AI integrated into our systems? (Application)

Who manages the model, data, and infrastructure? (Platform)

This clarity is what allows boards, executives, CISOs, and risk leaders to assign accountability, define governance, negotiate contracts, and recognize where risks actually sit. Below is an expanded explanation of each layer:

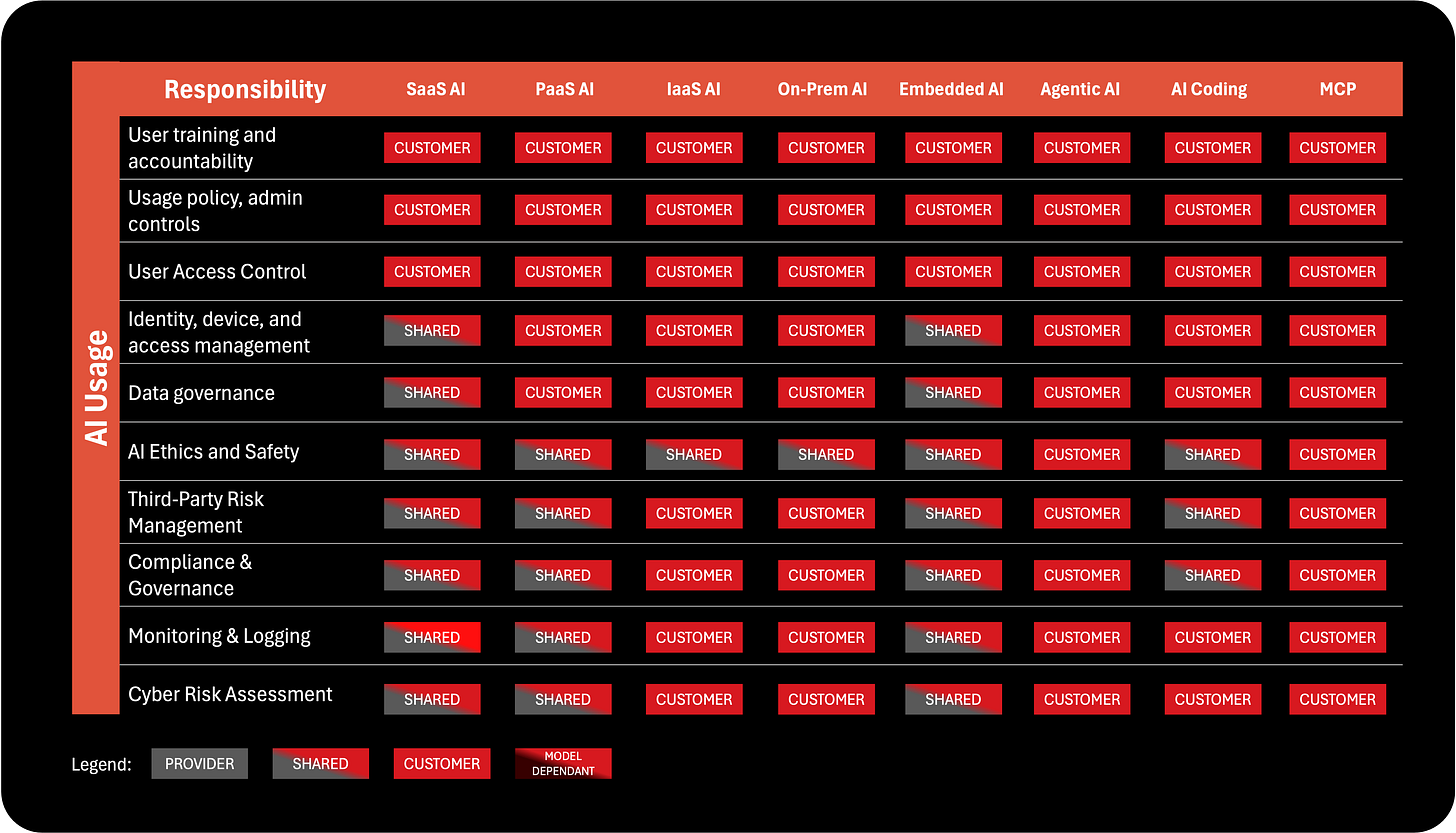

1. AI Usage Layer: Governance, People, and Responsible Use

The AI Usage layer is the top of the stack because it represents what every organization must own regardless of where the AI runs, who built it, or which vendor provides it. This layer is about people, governance, behavior, and policy: the elements that technology providers cannot control. Even the most advanced AI model cannot prevent a user from pasting sensitive data into a prompt or misinterpreting an output. That is why this layer includes responsibilities such as user training, access control, data governance, ethical use policies, third-party oversight, and accountability mechanisms.

In practice, this layer decides whether AI becomes a strategic asset or an unmanaged liability. A tool like ChatGPT or Copilot may come with strong security from the provider, but the organization must still ensure that employees do not misuse it, expose sensitive data, bypass approval processes, or make decisions without oversight. The Usage layer is where boards define guardrails, CISOs establish policy, HR and Legal set ethical boundaries, and business units adopt workflows that encourage safe, responsible use. It is the foundation of AI governance, and it always remains in the customer’s hands.

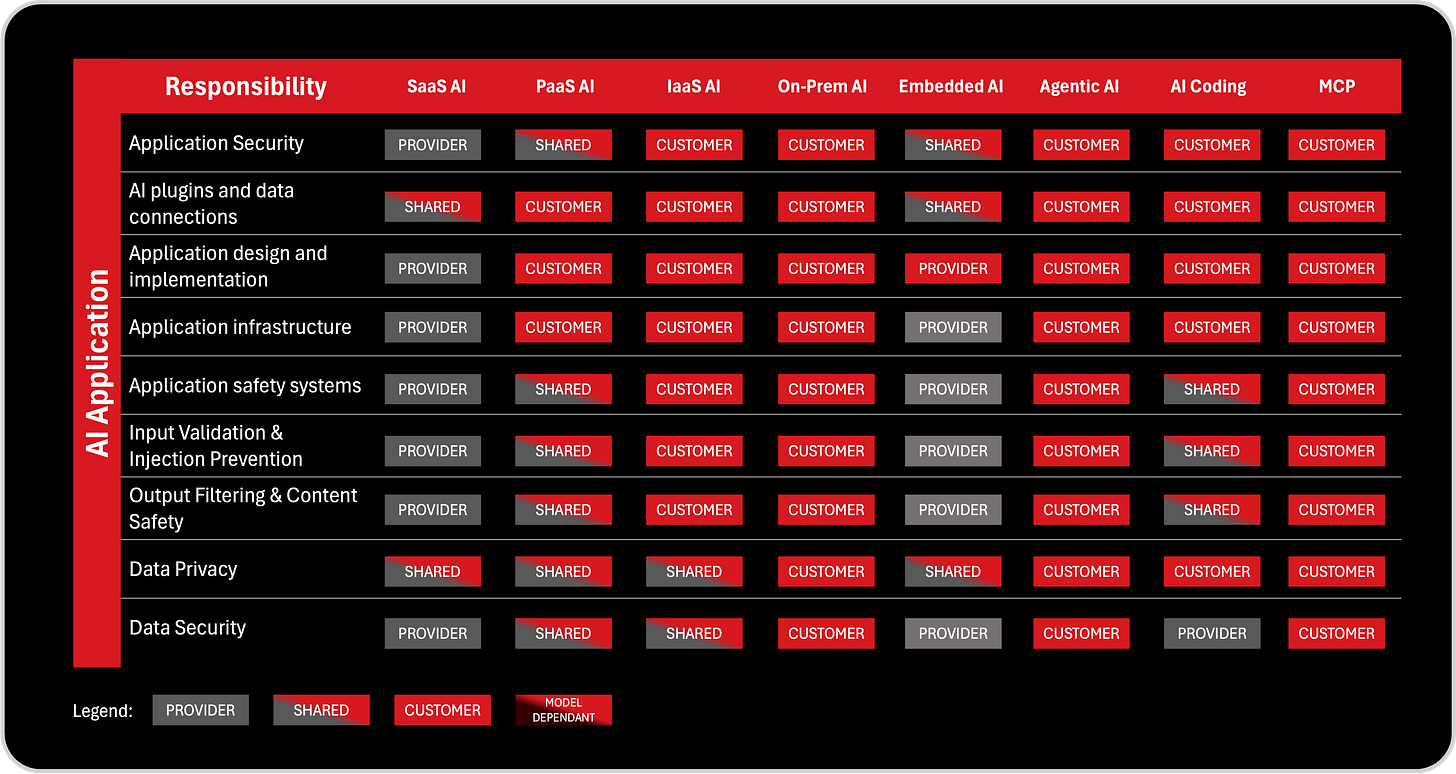

2. AI Application Layer: How AI Is Built Into Workflows and Systems

The AI Application layer is where technology meets business processes. It covers how AI is integrated into applications, workflows, tools, and customer-facing systems. Even if an organization does not build its own models, it still designs how those models are used, which plugins they access, what data flows through them, what decisions they support, and which systems they can modify or interact with. This layer includes responsibilities such as input validation, output filtering, plugin and integration management, application security, infrastructure supporting AI apps, and privacy controls.

Most real-world AI failures occur at this layer because organizations often underestimate how risky AI becomes when connected to internal systems. A harmless chatbot becomes dangerous the moment it can query a database or run an API call. A productivity tool becomes a risk vector when it gains access to internal documents or email. This is also the layer where design choices, such as chaining multiple AI tools, using retrieval systems, or enabling code execution, dramatically influence risk. In short, the Application layer governs how AI behaves, where it interacts, and how it fits into business logic. Responsibilities here are often shared, but customers retain ultimate accountability for how AI is deployed inside their ecosystem.
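To make the input validation and output filtering responsibilities more concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a complete control: the length limit, the two regular expressions, and the names `validate_input`, `filter_output`, `guarded_call`, and `fake_model` are all hypothetical, and real deployments would need far broader detection than a single injection phrase and an email pattern.

```python
import re
from typing import Callable

MAX_PROMPT_CHARS = 4000  # assumed limit, chosen only for illustration

# Illustrative patterns only; real systems need much broader coverage.
INJECTION_HINTS = re.compile(
    r"ignore (all )?(previous|prior) instructions", re.IGNORECASE
)
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def validate_input(prompt: str) -> str:
    """Reject prompts that are too long or match a known injection phrase."""
    if len(prompt) > MAX_PROMPT_CHARS:
        raise ValueError("prompt too long")
    if INJECTION_HINTS.search(prompt):
        raise ValueError("prompt matches an injection pattern")
    return prompt


def filter_output(text: str) -> str:
    """Redact email addresses before the output leaves the application."""
    return EMAIL.sub("[REDACTED EMAIL]", text)


def guarded_call(model: Callable[[str], str], prompt: str) -> str:
    """Wrap any model callable with input validation and output filtering."""
    return filter_output(model(validate_input(prompt)))


def fake_model(prompt: str) -> str:
    # Stand-in for a real model client (hypothetical).
    return "Contact jane.doe@example.com for details."
```

The point of the wrapper is ownership: whichever model sits behind `guarded_call`, the checks on either side of it belong to the team that built the integration.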

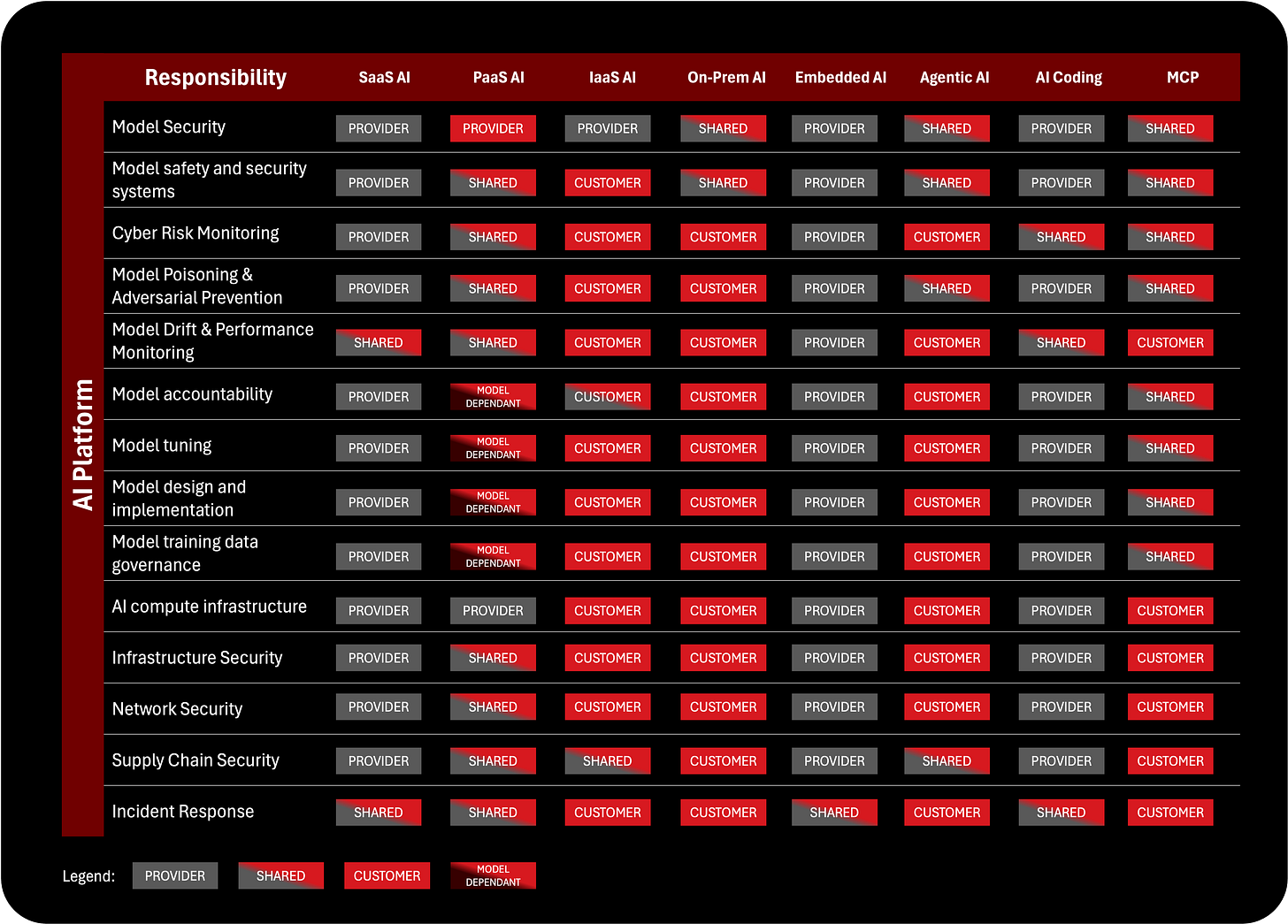

3. AI Platform Layer: Models, Training, Infrastructure, and Security

At the lowest level is the AI Platform layer, which includes the model itself, the training process, the evaluation pipeline, the underlying compute infrastructure, and the deep technical security controls required to keep AI systems reliable and safe. Responsibilities at this layer include model security, adversarial defenses, poisoning prevention, drift monitoring, training data governance, compute infrastructure, network security, and incident response for AI-specific failures. This is the layer where cloud providers, model developers, data scientists, and ML engineers typically operate.

Responsibilities here vary dramatically depending on the service type. In SaaS AI, the provider owns almost everything in the Platform layer. In PaaS AI, responsibilities are shared, especially when customers fine-tune or bring their own models. In IaaS or on-premises deployments, the customer owns nearly all platform responsibilities, from maintaining GPU clusters to securing training pipelines. This layer is where the most technical risks live: compromised training data, misaligned models, drifting behaviors, vulnerable dependencies, exposed weights, and resource misuse. While this layer may feel distant to executives, it is critical because platform failures can lead to unpredictable, unsafe, or harmful AI behavior, especially when the model interacts with real systems or takes autonomous actions.
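The way ownership shifts with the service type can be expressed as a small lookup table. The sketch below is a deliberately coarse reading of the paragraph above, not the model's full 33-area matrix; the `Owner` enum, the service-type keys, and `primary_owner` are names invented for illustration.

```python
from enum import Enum


class Owner(Enum):
    PROVIDER = "provider"
    SHARED = "shared"
    CUSTOMER = "customer"


# Coarse, illustrative summary of who primarily owns the Platform layer.
PLATFORM_PRIMARY_OWNER = {
    "saas": Owner.PROVIDER,     # provider owns almost everything
    "paas": Owner.SHARED,       # shared, especially with fine-tuning
    "iaas": Owner.CUSTOMER,     # customer owns nearly all of it
    "on_prem": Owner.CUSTOMER,  # customer owns nearly all of it
}


def primary_owner(layer: str, service_type: str) -> Owner:
    """Return the primary owner of a layer for a given service type."""
    if layer == "usage":
        # The Usage layer stays with the customer in every service type.
        return Owner.CUSTOMER
    if layer == "platform":
        return PLATFORM_PRIMARY_OWNER[service_type]
    # The Application layer split depends on the specific deployment,
    # so this sketch does not try to encode it.
    raise ValueError(f"no default owner encoded for layer: {layer}")
```

A table like this is only a starting point for discussion; the actual split for any given vendor has to come from contracts and evidence, not from defaults.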

Framework Origins and Inspiration

This is not an invention, nor an attempt to replace the excellent work already done in the industry. Instead, I built this model inspired by several existing cloud and AI shared responsibility frameworks, combining their strongest elements to fill a very real gap I kept encountering in board discussions, executive meetings, and strategic risk conversations. Over the years, I noticed that organizations had access to many frameworks (cloud security matrices, AI responsibility guidelines, governance checklists), yet leadership teams still struggled to translate them into meaningful decisions. They had diagrams, but not clarity; they had models, but not the language to ask the right questions during procurement, risk assessment, and vendor negotiations. What was missing was a unified view that bridged the technical foundations of AI security with the governance, accountability, and strategic concerns that executives and boards must handle. This model was built precisely to close that gap.

Cloud Shared Responsibility Foundations:

AWS Shared Responsibility Model - Amazon Web Services established the foundational shared responsibility framework for cloud computing, clearly delineating “security of the cloud” (provider) versus “security in the cloud” (customer).

Microsoft Azure Shared Responsibility - Microsoft’s framework refined the shared responsibility model across IaaS, PaaS, and SaaS, providing clarity on how responsibilities shift with service models.

Google Cloud Shared Responsibility and Shared Fate - Google introduced the concept of ‘shared fate’ alongside shared responsibility, emphasizing that provider and customer success are intertwined.

Oracle Cloud Infrastructure Shared Responsibility - Oracle’s framework provides detailed operational and security responsibility matrices across different service types.

Cloud Security Alliance (CSA) - The CSA has been instrumental in defining best practices and frameworks for cloud security shared responsibility across the industry.

PCI Security Standards Council - Cloud Computing Guidelines - The PCI DSS v2 Cloud Guidelines established compliance-focused shared responsibility models critical for regulated industries.

AI-Specific Shared Responsibility Models:

Microsoft’s AI Shared Responsibility Model - Microsoft’s framework was the first to explicitly adapt the shared responsibility model for AI systems, establishing foundational concepts for dividing responsibilities across AI usage, AI application, and AI platform layers in cloud AI services. Their model provided the initial structure for understanding how responsibilities shift between SaaS AI, PaaS AI, and IaaS AI deployments.

Mike Privette’s AI Security Shared Responsibility Model - This community-driven framework expanded the responsibility model with a comprehensive security-focused perspective, identifying specific security domains and controls relevant to AI systems. Privette’s work was groundbreaking in highlighting AI-specific threats like adversarial attacks, model poisoning, prompt injection, and the need for specialized security controls that don’t exist in traditional cloud computing.

Why This New Model Needed to Emerge

Drawing inspiration from cloud and AI models was not enough. The gap was not in technical understanding; it was in executive alignment. Organizations needed a model that:

Works in a board meeting, not just a security workshop

Helps CISOs explain risk to CFOs, CROs, CEOs, and general counsel

Supports vendor assessments, procurement decisions, and SLAs

Translates AI complexity into clear responsibility boundaries

Reflects eight AI service models, not just the traditional cloud three

Identifies 33 distinct responsibilities across usage, application, and platform layers

Helps leaders make decisions before incidents, not after

This model is intentionally built as a fusion: Cloud discipline + AI-specific risk + enterprise governance + real-world experience from hundreds of executive conversations.

It exists because organizations needed a responsibility model that fits how real AI adoption happens: messy, decentralized, fast-moving, and increasingly tied to strategic decisions, competitive advantage, and legal exposure.

Eight AI Service Types - Explanation with Real Use Cases

Understanding AI risk requires first understanding what kind of AI you are actually consuming. The biggest mistake I see in boardrooms and executive meetings is treating all AI systems as if they were the same. They are not. A model running as a SaaS tool behaves differently from one embedded in a CRM platform, and drastically differently from an autonomous agent capable of acting on behalf of a user. Each service type brings its own operational realities, governance requirements, and security responsibilities. Below is a comprehensive, narrative-based exploration of the eight AI service types in the AI Shared Responsibility Model, showing why this differentiation matters and how it directly affects your organization’s risk posture.

1. SaaS AI - Fully Managed AI Services

SaaS AI represents the most familiar and accessible category: fully managed AI applications such as ChatGPT Enterprise, Google Gemini, Claude, Midjourney, Otter.ai, Notion AI, or Perplexity Enterprise. These services abstract away the complexity of model training, infrastructure, patching, and platform-level security controls. For business leaders, SaaS AI is appealing because it provides instant value without requiring internal AI expertise or significant engineering investment. However, the biggest misconception about SaaS AI is believing that because the provider manages the technology, the organization no longer bears meaningful security responsibility. In reality, the provider handles the technical layers, but the customer remains responsible for governance, user training, access control, ethical usage, and data exposure prevention.

Imagine a healthcare company where employees begin using a SaaS AI tool to summarize emails and rewrite patient communication templates. Even if the vendor guarantees that prompts are not used for training, the organization is still responsible for ensuring that doctors and staff do not paste personally identifiable health information into the AI system. Similarly, legal firms must prevent the disclosure of privileged information, financial institutions must avoid exposing regulated data, and public-sector organizations must comply with strict information-handling laws. SaaS AI accelerates work - but without clear governance, it can also accelerate data leakage and compliance failures. The risks are behavioral rather than technical, making this service type as much a policy challenge as a technology one.

2. PaaS AI - AI Platforms for Customer-Built Applications

PaaS AI sits at the intersection of flexibility and shared responsibility. Services like Azure AI, Amazon Bedrock, Google Vertex AI, and IBM watsonx provide pre-trained models, model APIs, vector databases, and orchestration frameworks that customers can use to build their own AI-powered applications. While the provider secures the underlying model hosting environment and platform-level controls, the customer becomes responsible for everything built on top of it: workflow design, business logic, integrations, plugins, data flows, identity configuration, and application-level security. This is where many organizations encounter their first real AI security challenges, because PaaS AI enables deep customization and therefore creates surface area for misconfiguration.

Consider a retail enterprise building a custom product-recommendation chatbot using Bedrock. The platform is secure, but the development team inadvertently connects the model to internal inventory systems without proper rate-limiting, meaning a compromised identity or malicious prompt could extract sensitive product or supplier information. Or imagine a developer enabling a plugin that allows the model to run SQL queries, but forgetting to enforce row-level security. A simple prompt injection attack could expose customer purchases or loyalty program data. These failures are not the provider’s responsibility - they arise from application design choices made by the customer. PaaS AI is powerful and transformative, but it demands rigorous architecture reviews, secure coding practices, identity governance, and continuous monitoring. It is a shared responsibility zone, where both sides must uphold their part.
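The two failure modes in this scenario, missing rate limiting and missing row-level restrictions, can be sketched on the customer side as follows. This is a minimal illustration under stated assumptions: it uses an in-memory SQLite database, application-enforced row scoping rather than database-native row-level security, and hypothetical names (`allow_call`, `purchases_tool`, `demo_db`) and limits (5 calls per 60 seconds, 50 rows).

```python
import sqlite3
import time
from collections import defaultdict, deque
from typing import Optional

MAX_CALLS = 5          # assumed budget: 5 calls ...
WINDOW_SECONDS = 60.0  # ... per 60-second sliding window

_calls = defaultdict(deque)  # user_id -> timestamps of recent calls


def allow_call(user_id: str, now: Optional[float] = None) -> bool:
    """Sliding-window rate limit applied per authenticated user."""
    now = time.monotonic() if now is None else now
    recent = _calls[user_id]
    while recent and now - recent[0] >= WINDOW_SECONDS:
        recent.popleft()
    if len(recent) >= MAX_CALLS:
        return False
    recent.append(now)
    return True


def purchases_tool(conn: sqlite3.Connection, session_user_id: str, limit: int = 20):
    """Tool exposed to the model. The model may pick `limit`, but the user
    filter comes from the authenticated session, never from the prompt."""
    if not allow_call(session_user_id):
        raise PermissionError("rate limit exceeded")
    limit = max(1, min(int(limit), 50))  # clamp model-chosen value
    rows = conn.execute(
        "SELECT item, amount FROM purchases "
        "WHERE user_id = ? ORDER BY id LIMIT ?",
        (session_user_id, limit),  # parameterized, no string building
    )
    return rows.fetchall()


def demo_db() -> sqlite3.Connection:
    """Build a tiny in-memory table for the example."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE purchases "
        "(id INTEGER PRIMARY KEY, user_id TEXT, item TEXT, amount REAL)"
    )
    conn.executemany(
        "INSERT INTO purchases (user_id, item, amount) VALUES (?, ?, ?)",
        [("alice", "laptop", 1200.0), ("bob", "phone", 800.0),
         ("alice", "mouse", 25.0)],
    )
    return conn
```

The design choice worth noting is that the model never supplies the SQL text or the user identifier. A prompt injection can at most change `limit`, which is clamped, so the blast radius stays within the rows the signed-in user was already entitled to see.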

3. IaaS AI - Customer-Deployed Models on Compute Infrastructure

IaaS AI is the model for organizations that want full control: deploying custom models on GPU clusters, cloud compute instances, Kubernetes clusters, or on-premises servers. Here, the provider (AWS, Azure, Google Cloud, etc.) is responsible for the physical infrastructure, but everything else is owned entirely by the customer: model deployment, tuning, data handling, encryption, storage, networking configuration, patching, and runtime security. This is the AI equivalent of managing virtual machines in the early cloud era: full flexibility, but also full responsibility.

A real example: a fintech company trains a proprietary fraud-detection model using GPU clusters on AWS EC2. They own the entire ML pipeline: data ingestion, feature engineering, training, validation, monitoring, and deployment. But they also own the risks: if training data includes improperly anonymized customer transactions, the compliance failure is theirs. If the model container contains vulnerable libraries, it is their obligation to patch. If adversarial inputs bypass controls, they must implement detection. IaaS AI is ideal for organizations with strong ML teams and rigorous security practices, but for most enterprises, it introduces risks that can quickly overwhelm existing capabilities. It is the most resource-intensive model in this spectrum.

4. On-Premises AI - Full Control, Full Accountability

On-premises AI is chosen by organizations that cannot allow sensitive data, models, or workloads to leave their environment due to sovereignty, regulatory, or competitive reasons. This includes governments, intelligence agencies, research institutions, defense contractors, critical infrastructure providers, and highly regulated sectors such as medicine and finance. On-prem AI requires organizations to procure hardware (often specialized GPU servers), deploy models locally, manage ML pipelines, enforce physical and network security, and build the entire ecosystem internally.

Imagine a national bank that must keep transaction data inside the country for regulatory compliance. They deploy a commercial LLM on-premises, fine-tune it with internal data, and serve it to thousands of employees. They must now secure the model against prompt injection, ensure proper isolation between workloads, implement red-teaming, manage patches, maintain GPU clusters, and enforce strict access policies. If the model drifts, misbehaves, or fails, the responsibility is entirely on the organization. On-premises AI maximizes control and minimizes vendor dependency, but demands a level of operational maturity that only the most advanced organizations possess.

5. Embedded AI: AI Features Integrated into Enterprise Applications

Embedded AI is becoming one of the most pervasive service types. Tools like Microsoft Copilot, Salesforce Einstein, Adobe Firefly, and GitHub Copilot embed AI directly inside productivity suites, CRMs, ERPs, collaboration apps, and development environments. These AI capabilities operate within an existing application ecosystem, which means security responsibilities are shared in subtle but important ways. The provider secures the embedded AI component, but the customer remains responsible for how users interact with the AI inside their business processes.

A typical scenario: a sales team uses Salesforce Einstein to generate deal summaries based on CRM data. The model is safe within Salesforce’s environment, but if a poorly trained employee triggers actions that alter CRM fields or accidentally exposes customer data in generated reports, the missteps fall on the organization. Or consider GitHub Copilot inside a development pipeline: while the tool generates code, it is the development team’s responsibility to validate, review, test, and secure the generated code before it reaches production. Embedded AI blends into daily workflows invisibly, making governance crucial because employees often do not realize where AI ends and where the application begins.

6. Agentic AI: Autonomous AI Capable of Taking Actions

Agentic AI is the most disruptive, and the most misunderstood, category. These systems do not just generate text or suggestions; they can access tools, call APIs, perform actions, modify systems, schedule workflows, trigger transactions, update configurations, or even operate semi-independently within an environment. When an AI becomes capable of acting, not just advising, risk multiplies. Responsibility shifts dramatically toward the customer, because autonomous systems require supervision, containment boundaries, ethical safeguards, escalation paths, and real-time monitoring.

Imagine an enterprise deploying an internal “AI employee” agent that can generate reports, query databases, run scripts, send emails, and open tickets. If that agent receives a malicious prompt or interacts with an infected system, it could inadvertently exfiltrate data, delete files, trigger incorrect actions, or propagate errors. Or consider an AI cybersecurity agent tasked with automatically responding to alerts. If misconfigured, it could isolate critical systems, disable legitimate services, or mislabel internal traffic as malicious. Agentic AI introduces operational, ethical, and safety risks that require dedicated oversight functions. This is the service type where governance maturity matters most.
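One way to picture the containment boundaries and escalation paths described above is a gate that every agent action must pass through. The sketch below is an assumption-laden illustration: the risk tiers, the action names, and the `ActionGate` class are hypothetical, and the human approval step is reduced to a callback.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical risk tiers; a real deployment would define its own.
LOW_RISK = {"generate_report", "open_ticket"}
HIGH_RISK = {"send_email", "run_script", "delete_file"}


@dataclass
class ActionGate:
    # Human-in-the-loop callback: returns True to approve a risky action.
    approver: Callable[[str, dict], bool]
    audit_log: list = field(default_factory=list)

    def execute(self, action: str, args: dict, handler: Callable[..., object]):
        """Run `handler` only if the action passes the gate."""
        if action in LOW_RISK:
            decision = "allowed"
        elif action in HIGH_RISK:
            approved = self.approver(action, args)
            decision = "approved" if approved else "denied"
        else:
            decision = "blocked"  # default-deny anything not listed
        # Record every decision, including refusals.
        self.audit_log.append((action, decision))
        if decision in ("allowed", "approved"):
            return handler(**args)
        raise PermissionError(f"{action}: {decision}")
```

For example, `ActionGate(approver=ask_on_call_engineer)` (where `ask_on_call_engineer` is whatever approval mechanism the organization already trusts) would let the agent open tickets freely while pausing on anything that sends email or touches files.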

7. AI Coding: Code Generation and Development Acceleration

AI code assistants such as GitHub Copilot, Amazon CodeWhisperer, Replit AI, or JetBrains AI are reshaping software development. They accelerate coding, reduce boilerplate, and help junior developers learn faster. But the moment AI begins generating production code, security responsibility becomes a core concern. Providers generate code; customers must validate it, ensure it is secure, scan it, review it, integrate it responsibly, and maintain it.

Consider a developer generating microservices using Copilot. The assistant may produce outdated encryption patterns, missing error handling, insecure dependency versions, or logic flaws. If the code passes into production without review, the resulting vulnerabilities are the organization’s liability. AI coding tools can also inadvertently generate code snippets influenced by open-source projects with non-compliant licenses. This creates risk for intellectual property and commercial distribution. AI coding accelerates development, but the responsibility for correctness, security, and compliance remains 100% with the customer.

8. MCP (Model Context Protocol): Secure Model-to-Tool Communication

MCP, the Model Context Protocol, is an emerging architecture that allows AI models to securely interact with external tools, APIs, services, and data sources. It essentially creates a standardized protocol for connecting AI models with enterprise systems. Because MCP enables models to execute actions, retrieve data, and control tools, responsibility becomes heavily shared. Providers define the protocol and security primitives, but customers define which tools connect, what permissions they receive, and how context is exchanged.

Imagine an enterprise implementing MCP to allow an internal LLM to safely access a financial database, HR system, customer service tools, and document repositories. The protocol itself is secure, but the organization must control permissions, enforce audit logging, manage identities, define scopes, and ensure that sensitive systems are not inadvertently exposed. A misconfigured MCP endpoint could allow an LLM to retrieve more data than intended or to perform operations beyond its role. MCP is powerful, but it requires architectural discipline.
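The customer-side duties listed here (permissions, scopes, audit logging) can be sketched as a default-deny authorization check placed in front of every tool connection. The code below is plain Python and does not use or represent any MCP SDK; the `GRANTS` table, the role and system names, and `authorize` are hypothetical and exist only to illustrate the idea.

```python
from dataclasses import dataclass

# Hypothetical grants: scopes each assistant role holds on each system.
GRANTS = {
    "support_assistant": {
        "customer_service": {"read", "write"},
        "documents": {"read"},
    },
    "finance_assistant": {
        "financial_db": {"read"},
    },
}


@dataclass(frozen=True)
class ToolRequest:
    role: str
    system: str
    scope: str  # "read" or "write"


audit_log: list = []


def authorize(req: ToolRequest) -> bool:
    """Default-deny check; every decision is recorded for audit."""
    allowed = req.scope in GRANTS.get(req.role, {}).get(req.system, set())
    audit_log.append((req.role, req.system, req.scope, allowed))
    return allowed
```

Under these assumed grants, a support assistant asking to read the HR system is refused because no grant exists, and the refusal still appears in the audit log. That is the behavior to look for in any real configuration: systems are unreachable unless someone deliberately connected them.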

How to Use This Framework in AI Conversations: Asking the Right Questions

A framework is only valuable if leaders know how to apply it during real discussions. The true power of the AI Shared Responsibility Model is not in its diagrams or matrices, but in how it reshapes conversations inside the organization. This model gives executives a structured way to interrogate new AI proposals, evaluate vendors, challenge assumptions, and expose hidden risks before they materialize. It transforms ambiguous discussions (“Is this AI safe?” “Have we checked compliance?”) into concrete, guided conversations about responsibility boundaries. Instead of debating opinions or relying on intuition, leaders can now walk through the model’s categories and ask targeted, high-impact questions that reveal gaps, dependencies, and unspoken assumptions.

When a team proposes adopting a new AI solution, whether it’s an internal chatbot, a SaaS AI service, a custom PaaS application, or an agentic system with autonomous capabilities, the conversation should begin with clarifying which of the eight AI service types the solution belongs to. Naming the service type immediately unlocks the correct questions. For example, SaaS AI triggers governance and data protection questions; PaaS AI prompts questions about design, plugins, and integration risks; agentic AI demands deep exploration of oversight, fail-safes, and ethical boundaries. Without this classification step, organizations often talk past each other, assuming they are discussing the same kind of AI when they are not. Identifying the service type aligns everyone on the operational reality and frames the rest of the conversation.

Once the service type is clear, the next step is navigating the three responsibility categories, AI Usage, AI Application, and AI Platform, and asking targeted questions within each. Leadership discussions should start with AI Usage, because this category represents the responsibilities that always remain with the organization, regardless of deployment model. Meaningful questions here include:

Who can access this AI?

What training have we given them?

What data will they use?

Do we have usage policies?

Have we defined what is allowed versus prohibited?

How will we enforce accountability?

These questions often reveal that governance is missing, assumptions are untested, or the organization has unintentionally placed trust in user behavior rather than controlled processes. These conversations are especially important at board level, where misuse and misalignment carry reputational and regulatory consequences.

The conversation then moves into the AI Application layer. This is where questions uncover how AI is integrated into business processes and where many practical risks reside. Leaders should ask:

How is this AI being embedded into our workflows?

What systems does it connect to?

Are we validating inputs and filtering outputs?

Are plugins or tools creating new attack vectors?

How do we prevent prompt injection?

Who is responsible for output review and escalation?

These questions expose whether the team has considered operational guardrails, safety systems, and secure design practices. For vendor discussions, this category also reveals whether the provider has implemented sufficient application-level controls or whether additional responsibilities fall on the customer.

Finally, the AI Platform category elevates the conversation to deeper technical responsibilities, but in a way that remains accessible to non-technical leaders. Executives can ask:

Who secures the model?

Who monitors drift?

How do we detect adversarial behavior?

Who red-teams the model?

How is training data governed?

What happens if the model behaves unpredictably?

What is the incident response plan?

These questions prompt clarity about where provider obligations end and where customer responsibilities begin. They also ensure that the organization does not overestimate the provider’s role or underestimate the complexity of model security, monitoring, and lifecycle management.

Using this framework also improves vendor conversations and procurement negotiations. Teams can bring the model directly into vendor meetings and walk through each responsibility area, asking:

Which of these do you own?

Which do we own?

What controls do you provide?

What evidence can you show?

Where are the shared boundaries?

How do you handle incidents?

What are your limitations?

What do you require from us?

This approach prevents misunderstandings, vague promises, or assumptions that “the provider handles it.” It also leads to more robust contracts, clearer SLAs, and more realistic implementation plans.
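The walk-through described above can be recorded in a very simple structure: list the responsibility areas, note which ones each party claims, and look for anything nobody claimed. The sketch below is a hypothetical illustration; `reconcile` and the sample area names (taken from responsibilities mentioned earlier in this article) are not part of the model itself.

```python
from typing import Dict, Iterable, List, Set


def reconcile(
    areas: Iterable[str],
    vendor_claims: Set[str],
    customer_claims: Set[str],
) -> Dict[str, List[str]]:
    """Classify each responsibility area by who has claimed ownership."""
    result: Dict[str, List[str]] = {
        "provider": [], "customer": [], "shared": [], "unowned": [],
    }
    for area in areas:
        by_vendor = area in vendor_claims
        by_customer = area in customer_claims
        if by_vendor and by_customer:
            result["shared"].append(area)
        elif by_vendor:
            result["provider"].append(area)
        elif by_customer:
            result["customer"].append(area)
        else:
            # Nobody claimed it: this is the gap to raise in the meeting.
            result["unowned"].append(area)
    return result


# Sample inputs for illustration only.
AREAS = ["user training", "access control", "model security",
         "drift monitoring", "incident response"]
VENDOR = {"model security", "incident response"}
CUSTOMER = {"user training", "access control", "incident response"}
```

With these sample inputs, `reconcile(AREAS, VENDOR, CUSTOMER)` places "incident response" under shared and "drift monitoring" under unowned, which is exactly the kind of item that otherwise surfaces only after an incident.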

At the board and executive level, the model strengthens oversight by providing a structured way to challenge proposals without having to be an AI expert. Boards can ask management:

Which of the eight service types are we using?

Have we mapped responsibilities across the 33 areas?

Where are the shared responsibilities and how are we managing them?

What risks have we accepted?

How does this align with our risk appetite?

What additional controls, resources, or governance do we need?

These questions drive accountability and ensure alignment between operational plans and strategic risk tolerance. Most importantly, this framework turns AI conversations from abstract discussions into focused, actionable dialogues grounded in responsibility and governance. It gives leaders a common vocabulary, a shared mental model, and a set of guiding questions that bring clarity to complex AI decisions. The goal is not to provide all the answers, but to ensure the organization is always asking the right questions, the questions that ultimately determine whether AI becomes a competitive advantage or an unmanaged liability.

Next Steps

Artificial Intelligence is accelerating faster than governance structures, risk frameworks, and executive decision-making models can keep pace. In this environment, organizations cannot rely on intuition, assumptions, or traditional IT thinking to assess responsibility. AI brings new attack surfaces, new operational dependencies, new ethical questions, and entirely new categories of failure. Yet the most consistent gap I see, across industries, across regions, across maturity levels, is not technological. It is clarity. Leaders know they need AI. They know it brings opportunity and risk. But they do not always know the right questions to ask, the right boundaries to define, or the right internal owners to assign. This is where the AI Shared Responsibility Model provides real value: it creates structure where ambiguity exists, and alignment where confusion persists.

By clearly defining eight distinct AI service types and mapping 33 responsibility areas across usage, application, and platform layers, the model gives organizations a way to translate complexity into actionable insight. It exposes who owns what, where risks accumulate, and how responsibilities shift depending on architecture. It reveals blind spots, especially in governance, access control, data handling, safety systems, and model oversight, that would otherwise remain hidden until an incident occurs. Most importantly, it enables executives, boards, CISOs, and risk leaders to speak a common language. Instead of abstract debates about “AI risk,” the conversation becomes concrete: Which service type are we adopting? Which responsibilities are ours? Which belong to the provider? What is shared? What is unclear? What must be negotiated? This clarity is essential for effective decision-making.

Ultimately, this framework is not just a map of responsibilities; it is a tool for better leadership. It empowers organizations to adopt AI with confidence, grounded in real governance rather than assumptions. It strengthens vendor negotiations, improves procurement outcomes, guides internal planning, and supports the level of oversight boards are now expected to provide. It ensures that as AI becomes embedded in every process, system, and decision, organizations remain anchored in responsibility and accountability. The lesson from cloud security still holds: responsibility can shift, but it is never fully offloaded. In the AI era, this principle is even more urgent. Organizations that embrace this mindset will not only protect themselves more effectively; they will unlock the full value of AI with clarity, discipline, and strategic intent.