The Unintentional Attack: Learning from the AWS and Microsoft Outages

Lessons in Cyber Risk Management and Cyber Resilience

The past two weeks offered a quiet yet powerful reminder of how fragile our digital foundations have become. In a world where nearly every business function depends on the cloud, a single misstep can ripple across continents, halting operations and testing the resilience of systems we once believed unshakable.

Two of the world’s largest cloud providers, AWS and Microsoft, suffered massive global outages within days of each other.

The first came in mid-October 2025, when an automation and DNS misconfiguration at AWS cascaded through critical systems, disrupting thousands of dependent services across the globe. Then, days later, came the second collapse, when an “inadvertent configuration change” in Azure Front Door brought down Microsoft 365, Azure, and Xbox Live across multiple continents.

There was no ransomware, no zero-day, no nation-state adversary lurking in the shadows.

Only a few misplaced commands, human and automated, buried deep within the machinery of the cloud. This was not an attack in the traditional sense. There was no adversary, no exploit, no intent. And yet, the outcome mirrored what a sophisticated threat actor could have achieved deliberately.

It was, in every practical sense, an unintentional attack, one launched by the very systems we built to protect ourselves.

These incidents didn’t just interrupt services; they revealed something deeper about the nature of cyber risk itself. They showed that the same systems we build to scale and protect our digital lives can, under the right (or wrong) conditions, collapse under their own weight.

They reminded us that in modern cybersecurity and cyber resilience, intent is optional; impact is what matters.

When the System Breaks Itself

What makes these incidents so profound is not only their scale but their symbolism. Both began as simple operational adjustments, commands entered to optimize, patch, or fine-tune, and both cascaded into widespread digital paralysis. Users were locked out of email, cloud workloads froze, services failed, and the very backbone of digital business bent under its own complexity.

Technically, these weren’t breaches. There was no attacker, no malicious payload, no infiltration.

But in the language of cyber risk, they absolutely qualify as security events. A vulnerability was triggered, an environment became unstable, and the consequences were systemic.

To understand their significance, it’s useful to return to one of the oldest and simplest principles in cybersecurity, the CIA Triad: Confidentiality, Integrity, and Availability.

Most organizations design their defenses around the first two. We encrypt data to protect confidentiality. We implement change control and monitoring to preserve integrity. But the third pillar, availability, is often taken for granted, until it disappears.

These outages didn’t compromise confidentiality or integrity. No data was stolen. No records were altered. But they struck directly at availability, the lifeblood of every digital service and the foundation upon which trust in the cloud is built.

Because when systems go dark, it doesn’t matter whether the cause was a nation-state or a mistyped command: the business impact is the same.

In that moment, we rediscover a forgotten truth: the absence of an attacker does not equal the presence of security. Availability is not just a technical metric; it’s the heartbeat of digital resilience. And when it stops, the organization feels it everywhere, from the SOC to the boardroom.

These events didn’t happen because someone was attacking the system; they happened because the system broke itself. And that distinction changes everything.

In a connected world where automation moves faster than human oversight, intent becomes irrelevant. Whether the disruption comes from a malicious actor or a misconfigured setting, the impact is the same: lost availability, eroded trust, and paralyzed operations.

The Hidden Face of Vulnerability

Back in May 2025, I wrote “The Hidden Vulnerability: Why Overlooking Security Control Configurations Undermines Your Cyber Risk Assessment.” In that piece, I argued that misconfiguration is one of the biggest yet least acknowledged vulnerabilities in cybersecurity, a weakness so common, so ordinary, that it hides in plain sight. Five months later, two massive outages have proven how right, and how dangerous, that observation truly was. These incidents were not the result of advanced exploits or zero-days; they were the consequence of simple, human-triggered misconfigurations amplified by scale, automation, and dependency.

We often define cyber risk through the familiar equation: Threat × Vulnerability × Consequence.

Yet, as I wrote then, our collective gaze too often remains fixed on the first term, the threat. We chase adversaries, hunt for indicators of compromise, and measure our readiness in the language of detection. But we rarely pause to examine our own configurations, the human decisions, inherited defaults, and invisible assumptions that quietly define our exposure every day.
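To make the equation concrete, here is a minimal Python sketch. The 0-to-1 scales, the `RiskScenario` class, and every number in it are assumptions chosen for illustration; they are not measurements of either outage. The only point is that when the threat term is held constant, an unexamined configuration can dominate the result.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskScenario:
    """One scenario scored on hypothetical 0.0-1.0 scales."""
    name: str
    threat: float         # likelihood of a triggering event, malicious or accidental
    vulnerability: float  # how exposed the system is to that event
    consequence: float    # relative business impact if it happens

    def score(self) -> float:
        # Risk = Threat x Vulnerability x Consequence
        for value in (self.threat, self.vulnerability, self.consequence):
            if not 0.0 <= value <= 1.0:
                raise ValueError("each factor must be between 0.0 and 1.0")
        return self.threat * self.vulnerability * self.consequence


# Illustrative numbers only: same threat and consequence, different exposure.
scenarios = [
    RiskScenario("external intrusion", threat=0.5, vulnerability=0.25, consequence=1.0),
    RiskScenario("unvalidated config change", threat=0.5, vulnerability=0.75, consequence=1.0),
]

# Rank scenarios from highest to lowest risk score.
ranked = sorted(scenarios, key=lambda s: s.score(), reverse=True)
for scenario in ranked:
    print(f"{scenario.name}: {scenario.score():.3f}")
```

With these assumed inputs, the configuration scenario ranks first even though no adversary is more active in it; only the vulnerability term differs.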

A misconfiguration doesn’t announce itself. It doesn’t carry a CVE or severity score. It’s not published in a threat feed or mentioned in an advisory. It simply exists, a permission that’s too broad, an exclusion that’s too generous, a policy that wasn’t validated after deployment.

It’s a vulnerability created by design, not by attack.

AWS and Microsoft did not go down because their defense-in-depth failed, but because an automated process executed exactly as configured, just not as intended. The cause was not a compromised perimeter, but a configuration change that rippled through a vast digital ecosystem faster than it could be reversed. These events remind us that exposure isn’t always malicious; it’s often mechanical. Every new workload, every API, every container, every automation adds not just capability but potential misalignment. And in environments built for speed, even minor drift becomes major risk.

That’s why exposure management and configuration awareness are not administrative tasks; they are the nervous system of modern cybersecurity.

Because cyber risk doesn’t begin with a breach; it begins with a decision. And sometimes, that decision is only one misconfiguration away from chaos.

When Resilience Becomes the Last Perimeter

These outages were not failures of detection. They were failures of resilience.

We often talk about cybersecurity as a battle of speed and intelligence, detecting the attacker before they strike. But these events remind us that sometimes, the attacker doesn’t exist. Sometimes, it’s the infrastructure itself that falters, and resilience becomes our last line of defense. The truth is simple yet uncomfortable: our systems have outgrown our ability to fully comprehend them. They are complex, distributed, and dependent on a thousand invisible links that can break in silence.

Automation gives us scale but strips away the warning signs. A single misconfiguration, amplified by automation, can propagate faster than any SOC alert, any analyst triage, or any manual intervention can react.

This is why cyber resilience, the ability to absorb, adapt, and recover, has become the new perimeter. It’s not only about detecting malicious activity, but ensuring that human mistakes, technical drift, or process errors don’t cascade into global paralysis. In a hyper-connected world, resilience is not a luxury. It is the only defense left when prevention and detection both fail.
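One common way to keep a bad change from cascading is to apply it in stages and stop at the first sign of trouble. The sketch below is an assumption about how that idea could look in Python, not a description of how AWS or Microsoft deploy changes. The function `staged_rollout`, the stage names, and the simulated health check are all hypothetical.

```python
from typing import Callable, Iterable


def staged_rollout(
    stages: Iterable[str],
    apply_change: Callable[[str], None],
    is_healthy: Callable[[str], bool],
    roll_back: Callable[[str], None],
) -> list[str]:
    """Apply a change one stage at a time; undo everything at the first unhealthy stage.

    Returns the stages that kept the change (empty if the rollout was reverted).
    """
    completed: list[str] = []
    for stage in stages:
        apply_change(stage)
        if not is_healthy(stage):
            # Undo the failing stage and all earlier ones, newest first.
            for done in [stage, *reversed(completed)]:
                roll_back(done)
            return []
        completed.append(stage)
    return completed


# Simulated environment (hypothetical): the change breaks "region-b".
state: dict[str, str] = {}
touched: list[str] = []


def apply_change(stage: str) -> None:
    touched.append(stage)
    state[stage] = "new-config"


def roll_back(stage: str) -> None:
    state.pop(stage, None)


def is_healthy(stage: str) -> bool:
    return stage != "region-b"


result = staged_rollout(
    ["canary", "region-a", "region-b", "global"], apply_change, is_healthy, roll_back
)
print("stages keeping the change:", result)
print("stages ever touched:", touched)
```

In this simulation the faulty change never reaches the `global` stage, which is the property that matters: the mistake still happens, but its blast radius stays bounded.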

The Mirror of Intent

When I look at these outages through the lens of cyber risk, I can’t help but think about the fragility of intent. If an accidental misconfiguration can bring down global systems, what could a malicious actor with the same privileges achieve, deliberately?

Intent changes the narrative, but not the consequence.

That’s what makes configuration risk so insidious. It blurs the line between human error and malicious intent, between what we meant to do and what actually happens. It exists at the intersection of technology, process, and human behavior. It is the bridge between the predictable and the unexpected, where a single trusted action can have untrusted outcomes.

Imagine disabling multi-factor authentication during a test and forgetting to re-enable it. In that moment, nothing seems wrong — the system behaves as expected, automation proceeds, operations continue. But beneath that surface, you’ve just created an open path that an attacker could exploit, or worse, an internal process could misuse. One forgotten configuration, one missing control, one unchecked assumption, that’s all it takes for security to become fragility.
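The forgotten-MFA scenario can be guarded against by treating every temporary exception as something with an expiry. The following Python sketch assumes a hypothetical `ControlOverride` record and a made-up four-hour limit; it is not tied to any real identity platform, and the names and durations are illustrative only.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class ControlOverride:
    """A security control that was deliberately switched off for a limited time."""
    control: str              # hypothetical control name, e.g. "mfa"
    reason: str
    disabled_at: datetime
    max_duration: timedelta   # how long the exception was approved for

    def is_overdue(self, now: datetime) -> bool:
        # Overdue once the approved window has fully elapsed.
        return now > self.disabled_at + self.max_duration


def overdue_overrides(overrides: list[ControlOverride], now: datetime) -> list[ControlOverride]:
    """Return the overrides that should already have been re-enabled."""
    return [o for o in overrides if o.is_overdue(now)]


# Fixed timestamp so the example is reproducible.
now = datetime(2025, 11, 1, 12, 0, tzinfo=timezone.utc)
overrides = [
    ControlOverride("mfa", "load test", now - timedelta(hours=30), timedelta(hours=4)),
    ControlOverride("ip-allowlist", "vendor demo", now - timedelta(hours=1), timedelta(hours=4)),
]

for override in overdue_overrides(overrides, now):
    print(f"{override.control} still disabled after '{override.reason}'")
```

Here only the `mfa` override is reported, because it outlived its approved window while everything else kept running normally.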

The same automation that accelerates business transformation can, in the wrong context, accelerate its collapse. And it’s here that the boundaries between cybersecurity, reliability engineering, and operational governance begin to dissolve. They are no longer separate disciplines, but interdependent elements of a single objective: cyber resilience. Every misconfiguration must be treated as both an operational failure and a security event, because in the end, they lead to the same place, disruption, loss, and erosion of trust.

The difference is not in what happened, but in whether we were prepared to recognize that the threat can also come from within.

Why I Created the Cybersecurity Compass

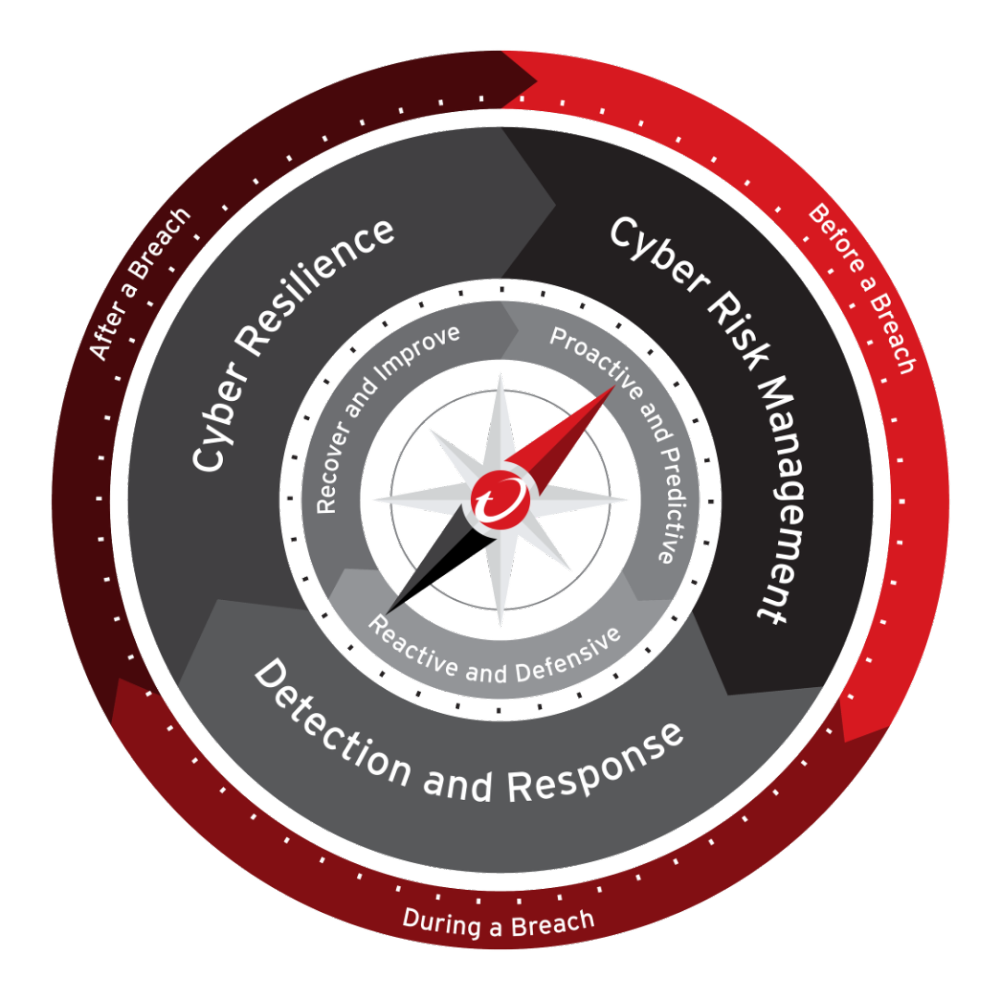

When I first designed the Cybersecurity Compass, I wanted to challenge a deeply rooted assumption, that cybersecurity follows a straight line. For decades, we’ve been trained to think in stages: prevention before the breach, detection during the breach, and recovery after. It’s a comforting narrative, linear and orderly. But the digital world we live in today doesn’t move in straight lines anymore.

Modern infrastructures are fluid and unpredictable. Cloud workloads appear and disappear in seconds. Identities multiply across platforms. Dependencies stretch across geographies and third-party code. And automation, designed to simplify, has instead multiplied the speed and scale of change. In this environment, cyber risk is no longer defined by the attacker at the gate, but by the choices made within the walls, by how we configure, connect, and maintain the systems that keep our world running.

That’s why those outages struck me so deeply. In both cases, the threat wasn’t a cybercriminal group or a nation-state actor. There was no exploit, no malware, no orchestrated campaign. The threat was internal, a misconfiguration, a lapse in governance, a decision made without the right context or control.

In other words, the threat was preventable.

When I saw these incidents unfold, I realized how clearly they illustrated the message behind the Compass. The Compass wasn’t created to describe attacks, it was created to describe movement. It reflects the way organizations must constantly shift between proactive and predictive risk management, reactive and defensive response, and recovery and improvement in the face of disruption. These aren’t separate phases, but interconnected modes of awareness.

At its core, the Compass is about balance. It’s not a static framework or a directional chart pointing north; it’s a dynamic orientation system. The red needle symbolizes continuity — always turning, always recalibrating, always learning. It reminds us that cybersecurity is not about reaching a destination, but about staying aligned while navigating constant motion.

In both cases, alignment was momentarily lost. The technology didn’t fail; it did exactly what it was designed to do. What failed was governance, the ability to anticipate, contextualize, and prevent unintended consequences. Controls existed, automation worked, and yet, those same mechanisms became the source of disruption.

This is the paradox of modern cybersecurity: our greatest strengths can quickly become our greatest vulnerabilities when we stop paying attention to the direction we’re moving in. I created the Cybersecurity Compass to bring that direction back. It connects cyber risk management, detection and response, and cyber resilience into a single, continuous feedback loop, what I now call the Continuous Defense Loop.

It’s a model where every signal, every incident, and every failure feeds back into learning and recalibration. Because resilience isn’t what happens after recovery; it’s what makes recovery possible.

In these outages, the “threat” was not external, but the absence of anticipation, the failure to see configuration risk as part of the same continuum as threat detection or incident response. The Compass reminds us that all three belong together. It’s not about predicting the future; it’s about staying oriented when the unexpected happens.

The hardest lessons in cybersecurity rarely come from attacks we couldn’t stop. They come from the incidents we could have prevented. The Compass is my way of transforming that lesson into a framework, one that helps us re-center our focus on prevention, governance, and balance.

Because in a world where the line between human error and malicious intent is increasingly blurred, true security begins with orientation. The Compass exists to remind us that cybersecurity is not only about surviving the storm.

It’s about knowing where you stand, what truly matters, and which way to turn when the system, or the world, suddenly spins.

From SOC to CROC: Learning from Silence

A traditional Security Operations Center (SOC) was built to detect and respond to adversaries. But what happens when there is no adversary, only exposure?

This is where the concept of a Cyber Risk Operations Center (CROC) becomes vital — a shift from reaction to reflection. The CROC is not an evolution of the SOC; it’s an expansion of its purpose. It monitors cyber risk data, not just threat data. It focuses on the configurations, permissions, and interdependencies that quietly shape the organization’s true security posture.

Within the CyberRiskOps model, misconfiguration data is as valuable as telemetry from an intrusion detection system. Because the presence of a misconfiguration is not just an operational oversight, it’s a quantifiable element of cyber risk.

It’s the signal that tells you where your next outage, or your next breach, might begin.

To use security controls effectively, it is not enough to have them and use them; you must use them well. And “well” means configured with precision, aligned with governance, and continuously monitored for drift. This is the philosophy that connects the Cybersecurity Compass, CROM, and CROC Levels, transforming isolated defense mechanisms into a living, continuous feedback loop between cyber risk and cyber resilience.
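Monitoring for drift can start very simply: compare what is running against what was approved. The Python sketch below is a minimal illustration under stated assumptions. The function `detect_drift`, the `DriftFinding` record, and the setting names are hypothetical, and a real CROC would draw this data from actual configuration sources rather than hand-written dictionaries.

```python
from dataclasses import dataclass
from typing import Any

ABSENT = "<absent>"  # placeholder for a setting that exists on only one side


@dataclass(frozen=True)
class DriftFinding:
    setting: str
    expected: Any   # value in the approved baseline
    actual: Any     # value observed in the running environment


def detect_drift(baseline: dict[str, Any], current: dict[str, Any]) -> list[DriftFinding]:
    """List every setting whose current value differs from the approved baseline."""
    findings = []
    for setting in sorted(baseline.keys() | current.keys()):
        expected = baseline.get(setting, ABSENT)
        actual = current.get(setting, ABSENT)
        if expected != actual:
            findings.append(DriftFinding(setting, expected, actual))
    return findings


# Hypothetical baseline and observed state.
baseline = {"mfa_required": True, "public_access": False, "log_retention_days": 90}
current = {
    "mfa_required": False,        # weakened after deployment
    "public_access": False,
    "log_retention_days": 90,
    "admin_wildcard_role": True,  # never part of the approved baseline
}

findings = detect_drift(baseline, current)
for finding in findings:
    print(f"{finding.setting}: expected {finding.expected}, found {finding.actual}")
```

Each finding is a countable data point, which is what lets misconfiguration sit alongside threat telemetry as a measurable input to cyber risk.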

The Deeper Lesson

The twin outages of October 2025 will be remembered as more than technical events. They are symbols, warnings from within the cloud. They show us that in the age of automation, our systems no longer fail because of attackers alone, but because of their own complexity.

Cyber risk today is not only about who is targeting us, but also about how we build, deploy, and govern the systems we rely upon. Our resilience depends not on the absence of attacks, but on the strength of our design, our discipline, and our humility. The greatest vulnerability in cybersecurity has never been technology, it has always been overconfidence.

We assume that automation means control, that redundancy means resilience, that scale means safety. But these outages remind us that scale magnifies both power and fragility. Cyber Resilience begins when we accept that failure is inevitable, but collapse is preventable.

And that the path to true security no longer runs through detection, but through configuration, through understanding what we have built, how it behaves, and how it fails. Because misconfigurations don’t just cause outages; they create openings. We noticed these events because they disrupted operations, because they made noise. But hundreds, even thousands of misconfigurations happen every minute, silently, across cloud environments, SaaS platforms, and automation pipelines. Most go unnoticed, living quietly in the background, until one becomes the entry point for something far worse. That is the unseen surface of modern exposure: not the attacks we detect, but the conditions that invite them.

The world saw two outages. I saw two mirrors.

Each reflecting the same truth: in modern cybersecurity, the difference between safety and failure, between cyber resilience and breach, can be just one configuration away.

++++++

Castro, J. (2024). Cyber Resilience: The Learning Phase of the Cybersecurity Compass Framework. ResearchGate. https://www.researchgate.net/publication/388616312 DOI:10.13140/RG.2.2.21546.73927

Castro, J. (2025). Umbrellas, Storms, and Cyber Risk: Why Threat Management Is Not Risk Management. ResearchGate. https://www.researchgate.net/publication/396695719 DOI:10.13140/RG.2.2.24818.98240

Castro, J. (2025). Every Cyber Risk. Every Signal. Continuous Defense Loop. ResearchGate. https://www.researchgate.net/publication/396885730 DOI:10.13140/RG.2.2.30137.22882

Castro, J. (2025). What Is Governance in Cybersecurity? ResearchGate. https://www.researchgate.net/publication/393065290 DOI:10.13140/RG.2.2.30988.63360

Castro, J. (2025). The Cybersecurity Compass: Strategically Navigating the Stormy Digital Ocean. ResearchGate. https://www.researchgate.net/publication/389628862 DOI:10.13140/RG.2.2.15582.55366

Castro, J. (2024). From Reactive to Proactive: The Critical Need for a Cyber Risk Operations Center (CROC). ResearchGate. https://www.researchgate.net/publication/388194441 DOI:10.13140/RG.2.2.27408.93445/1

Castro, J. (2025). Cyber Risk Operational Model (CROM): From Static Risk Mapping to Proactive Cyber Risk Operations. ResearchGate. https://www.researchgate.net/publication/390490235 DOI:10.13140/RG.2.2.15956.92801
